President Biden Signs Sweeping Artificial Intelligence Executive Order

On October 30, 2023, President Joe Biden issued an executive order (EO or the Order) on Safe, Secure, and Trustworthy Artificial Intelligence (AI) to advance a coordinated, federal governmentwide approach toward the safe and responsible development of AI. It sets forth a wide range of federal regulatory principles and priorities, directs myriad federal agencies to promulgate standards and technical guidelines, and invokes statutory authority (the Defense Production Act) that has historically been the primary source of presidential authorities to commandeer or regulate private industry to support the national defense. The Order reflects the Biden administration’s desire to make AI more secure, to cement U.S. leadership in global AI policy ahead of other attempts to regulate AI (most notably in the European Union and United Kingdom), and to respond to growing competition in AI development from China.

The Order builds on momentum from voluntary commitments made by over a dozen leading technology companies earlier this year in meetings with the White House and Secretary of Commerce regarding security testing and the identification of AI-generated content. Reiterating statements made by the White House at the time of the voluntary commitments in July and September 2023, the Order calls for a “society-wide effort that includes government, the private sector, academia, and civil society” to realize the benefits of AI while mitigating risks. The Order states that “irresponsible use could exacerbate societal harms such as fraud, discrimination, bias, and disinformation; displace and disempower workers; stifle competition; and pose risks to national security.” While preserving innovation is a key White House policy objective, the Order offers relatively little to promote innovation in the AI industry; its innovation provisions focus primarily on developing, recruiting, and otherwise drawing in new AI talent.

The EO directs federal agencies to both use AI safely and encourage the safe use of AI by the private sector. Beyond specific premarket testing and reporting requirements that will apply to certain AI developers, much of the “regulatory” impact of the EO will arise from the vast array of technical standard-setting and guidance to be generated over the next year by several executive departments and independent agencies. Such standards will also, by design, seep into the private sector through the government’s purchasing power, and much of the guidance has the potential to reach beyond technology companies and government vendors. The EO directs several sectoral regulatory authorities, including the Departments of Commerce, Energy, Health and Human Services, Homeland Security, Transportation, and Education, as well as the Copyright Office and U.S. Patent and Trademark Office, to address risks and potential benefits from the use of AI in financial services, healthcare, biotechnology, energy, transportation, telecommunications, intellectual property, competition, labor, education, housing, law enforcement, consumer protection, cybersecurity, national security, privacy, and trade. Indeed, the Office of Management and Budget (OMB) has already issued draft guidance to assist in the implementation of AI governance, standards, and safeguards under the new EO.

Companies should closely monitor activity from the various regulators encouraged or tasked with specific actions over the next several months under the EO for new obligations. For example, the Treasury Department is directed to issue a public report on best practices for financial institutions to manage AI-specific cybersecurity risks. The EO “encourages” independent regulatory agencies, such as the Federal Trade Commission, Consumer Financial Protection Bureau, and Federal Communications Commission, to take numerous, specified actions under the Order. Companies that develop AI technologies also can expect new testing, auditing, and information-sharing obligations prior to bringing new AI technologies to production, particularly where they provide technologies to the federal government. The EO provides the Secretary of Commerce with extensive responsibilities to set technical standards and reporting obligations, built on the existing work from the National Institute of Standards and Technology, including NIST AI 100-1. The Order directs the Patent and Trademark Office and Copyright Office to consider intellectual property, copyright, and inventorship issues raised by the use of AI. Finally, while the Order generally carves out military and intelligence applications from its directives, additional regulations and guidance may follow from an interagency National Security Memorandum on AI ordered by the President.

President Biden’s remarks prior to signing the EO acknowledged that “[t]he order is about making sure that AI systems can earn American people’s trust and trust from people around the world.” To help build that trust, the EO seeks to develop standards and means to identify AI-generated content for the public, battling risks from deepfakes and taking into account intellectual property concerns. The EO also emphasizes that privacy considerations — including with respect to data collected and used to develop AI technologies — are critical to maintaining trust. President Biden again called on Congress to pass bipartisan data privacy legislation that addresses AI risks and highlighted the work of Senate Majority Leader Charles Schumer, D-New York, to develop comprehensive AI legislation with bipartisan support.

The EO establishes a new governing model for AI in the federal government. The Order, through the OMB implementation guidance, will establish new chief AI officers throughout the executive branch. It also creates a new White House AI Council chaired by the Assistant to the President and Deputy Chief of Staff for Policy and populated by key Cabinet members and other functional leaders within the administration. The White House AI Council will help coordinate the regulatory, reporting, and other AI-related government activity that the President has mandated under the EO. These structures are likely to contribute to an enduring focus on AI and broader technology risk management within federal government as well as provide an important AI governance model for the private sector.

Key Directives

Ensuring AI Safety and Security via the Defense Production Act

The longest section of the EO is devoted to mitigating AI risk in the context of national security and critical infrastructure. Notably, the order delegates to the Secretary of Commerce certain authority to mandate reporting regarding potential AI models to the Department of Commerce under the Defense Production Act (DPA), as amended, 50 U.S.C. 4501 et seq. Invoked by both President Biden and former President Donald Trump in response to the Covid-19 pandemic, the DPA empowers the Executive to assert broad authority over private industry.

Pursuant to that authority, the EO directs the Secretary of Commerce, in consultation with the Secretaries of State, Defense, and Energy and the Director of National Intelligence, to adopt reporting requirements for potential “dual-use foundation models,” those high-performance models that pose, or could be modified to pose, a serious risk to national security, national economic security, or national public health and safety. Companies developing or demonstrating the intent to develop dual-use foundation models will be required to report to the federal government how they train and test these models, including results of the red-team testing (adversarial testing for risks and bad behavior in AI models) the company has conducted. Companies will also be required to provide the federal government with specific information about any large-scale computing cluster, reporting any acquisition, development, or possession of such a cluster, including its existence, power, and location. The Department of Commerce published a press release shortly after the EO, noting that the Bureau of Industry and Security will invoke the DPA to require companies that have developed dual-use foundation models to report to the Department of Commerce the results of their red-teaming, as required by the EO.

The National Institute of Standards and Technology (NIST) has been tasked to develop guidelines for these red-teaming tests. Per the Department of Commerce press release, NIST will “develop industry standards for the safe and responsible development of frontier AI models, create test environments to evaluate these systems, and develop standards on privacy and on authenticating when content is AI-generated.” The Department of Homeland Security will incorporate those standards as appropriate into guidelines for critical infrastructure sectors and establish the AI Safety and Security Board.

Emphasizing the importance of protecting critical infrastructure, the EO directs the head of each agency with relevant regulatory authority over critical infrastructure and the heads of relevant sector risk management agencies to collaborate with the Director of the Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency to assess potential risks associated with the use of AI in critical infrastructure sectors. This includes identifying ways that the deployment of AI could increase the susceptibility of critical infrastructure systems to physical attacks, cyberattacks, and critical failures and considering ways to mitigate these vulnerabilities. It also directs certain agencies in charge of crucial sectors, such as the financial sector, to issue reports on best practices to mitigate AI-specific security risks for regulated entities within 90 days.

On the national security front, the Order directs the Assistant to the President for National Security Affairs and the Assistant to the President and Deputy Chief of Staff for Policy to oversee the development of an interagency national security memorandum on AI.

Privacy

Recognizing that government can be both a supplier and procurer of personal data relevant to AI deployment, the EO directs the OMB Director, in consultation with the Federal Privacy Council and the Interagency Council on Statistical Policy, to evaluate agency standards and procedures regarding the collection, processing, storage, and dissemination of commercially available information that contains personally identifiable information. The EO also seeks federal support to develop and implement measures to strengthen privacy-preserving research and technologies and create guidelines that agencies can use to evaluate privacy techniques used in AI. The EO also calls on independent regulatory agencies to use existing authorities to protect American consumers from threats to privacy, a call that the Federal Trade Commission has repeatedly made clear it is ready to answer, having already issued multiple guidance documents on the use of AI and algorithms. In addition to scrutiny of companies that develop AI technologies, privacy and consumer protection scrutiny may extend to companies’ selection and oversight of vendors of AI technology. This is emphasized through the EO’s call for regulators to “clarif[y] the responsibility of regulated entities to conduct due diligence on and monitor any third-party AI services they use.”

Equity and Civil Rights

The Biden administration also directed agencies to combat algorithmic discrimination. The EO calls for guidance to landlords, federal benefits programs, and federal contractors to prevent AI from being used to exacerbate discrimination; collaboration between agencies on training and technical assistance for investigating and prosecuting civil rights violations related to AI; and best practices for using AI in the criminal justice system to improve efficiency in a fair and equitable manner.

The Order encourages the Directors of the Consumer Financial Protection Bureau and Federal Housing Finance Agency to exercise their existing authorities to combat discrimination and biases against protected groups in consumer financial and housing markets.

Consumers, Patients, Students, and Workers

The EO contemplates that AI’s benefits to consumers should be balanced with the risks it poses, including by injuring or misleading Americans. The EO directs the Department of Health and Human Services to establish a safety program that collects data on and addresses harms or unsafe healthcare practices involving AI. Independent agencies — such as the Federal Trade Commission and Consumer Financial Protection Bureau — are encouraged to ensure that existing consumer protection laws and principles are applied to AI and enact new safeguards to protect consumers from AI-related harms such as fraud, bias, discrimination, privacy infringements, and safety risks, particularly in critical sectors such as healthcare, financial services, education, housing, law, and transportation.

The EO also recognizes that the labor market is being affected by AI, with risks from workplace bias, surveillance, and job displacement. The EO calls for the development of principles and best practices to benefit workers, including the production of a report on AI’s potential labor-market effects.

Intellectual Property

The Order acknowledges that the use of AI raises important new intellectual property (IP) issues. It instructs the Director of the Patent and Trademark Office to publish guidance to patent examiners and applicants addressing inventorship and the use of AI and other issues at the intersection of AI and IP. The Order also directs the Patent and Trademark Office and Copyright Office to provide recommendations to the President on potential executive actions related to copyright and AI, including on the scope of protection for works produced using AI and the treatment of copyrighted works in AI training. Finally, the Order directs the Departments of Homeland Security and Justice to develop training, guidance, and other resources to combat and mitigate AI-related IP risks, including AI-related IP theft and other IP risks.

Innovation and Competition

To try to develop innovative and trustworthy AI systems, the EO directs federal agencies to promote fair competition in the AI market and support the development of AI standards. Importantly, it encourages the Federal Trade Commission to exercise its authorities, including its rulemaking authority to regulate unfair and deceptive practices under the Federal Trade Commission Act, 15 U.S.C. 41 et seq., to protect both consumers and workers.

To increase the AI talent available to the industry and to the federal government, the Order directs the Secretaries of State and Homeland Security to make a number of changes to the visa process to attract and retain talent in AI. The Order also mandates the creation of an AI and Technology Task Force to accelerate and track the hiring of AI talent across the federal government and directs agencies to implement AI training and familiarization programs. The Order also provides for the creation of a pilot program for the National AI Research Resource, a National Science Foundation Regional Innovation Engine, and four National AI Research Institutes.

American Leadership Around the World

The EO commits the United States to working with other nations to advance the responsible development and use of AI. It also directs federal agencies to support international efforts to develop AI standards and norms and to engage with other nations on the responsible use of AI in areas such as national security and international trade.

Responsible and Effective Government Use of AI

The EO seeks clear standards and guidance for federal agencies in their use of AI. It requires that each agency designate a chief artificial intelligence officer to coordinate its use of AI. It also directs agencies to facilitate governmentwide acquisition of AI services and products and to seek additional AI talent and provide AI training for employees at all levels in relevant fields. In particular, the EO sets forth the grounds of a model policy statement on generative AI, stating:

As generative AI products become widely available and common in online platforms, agencies are discouraged from imposing broad general bans or blocks on agency use of generative AI. Agencies should instead limit access, as necessary, to specific generative AI services based on specific risk assessments; establish guidelines and limitations on the appropriate use of generative AI; and, with appropriate safeguards in place, provide their personnel and programs with access to secure and reliable generative AI capabilities, at least for the purposes of experimentation and routine tasks that carry a low risk of impacting Americans’ rights. To protect Federal Government information, agencies are also encouraged to employ risk-management practices, such as training their staff on proper use, protection, dissemination, and disposition of Federal information; negotiating appropriate terms of service with vendors; implementing measures designed to ensure compliance with record-keeping, cybersecurity, confidentiality, privacy, and data protection requirements; and deploying other measures to prevent misuse of Federal Government information in generative AI.

The OMB has already released draft guidance for other agencies on the implementation of certain directives in the Order and is seeking public input. The draft guidance is structured around strengthening AI governance, advancing responsible AI innovation, and managing risks from the use of AI by agencies. Among the direction to agencies is a call to remove unnecessary barriers to the responsible use of AI, including barriers related to inadequate IT infrastructure, datasets, cybersecurity authorization practices, and AI talent.

Steps Ahead

The EO imposes a series of deadlines on federal agencies to issue reports and AI guidelines for a variety of sectors within the coming months. Further, the Biden administration is representing the United States’ commitment to establishing guardrails around the use of AI globally. In a policy address at the U.S. Embassy in London on Wednesday, Vice President Kamala Harris announced a new AI Safety Institute in the Department of Commerce, new draft guidance on the federal government’s use of AI, and a declaration joined by 27 other nations and the EU working to understand the risks and opportunities of AI and establish a “set of norms for responsible development, deployment and use of military AI capabilities.” She also attended a summit on artificial intelligence in the United Kingdom on Thursday, November 2. Additionally, the Group of Seven industrial countries announced a voluntary code of conduct for companies developing advanced AI systems on the same day that the EO was signed by President Biden. The code of conduct is expected to require companies to mitigate potential societal harm from their AI systems; implement robust cybersecurity controls; and establish risk management systems to mitigate the potential misuse of AI. The Order and the remarks by the Biden administration seek to position the U.S. as a leader in AI policy and regulation while not stifling innovation.

Congress could be the biggest obstacle to creating new rules. This EO has the force of law, and some executive agencies are confident that they have the power to effectively implement it and set AI policy. However, in other cases, particularly for privacy, there could be a limit to what an EO can achieve unless Congress enacts legislation.

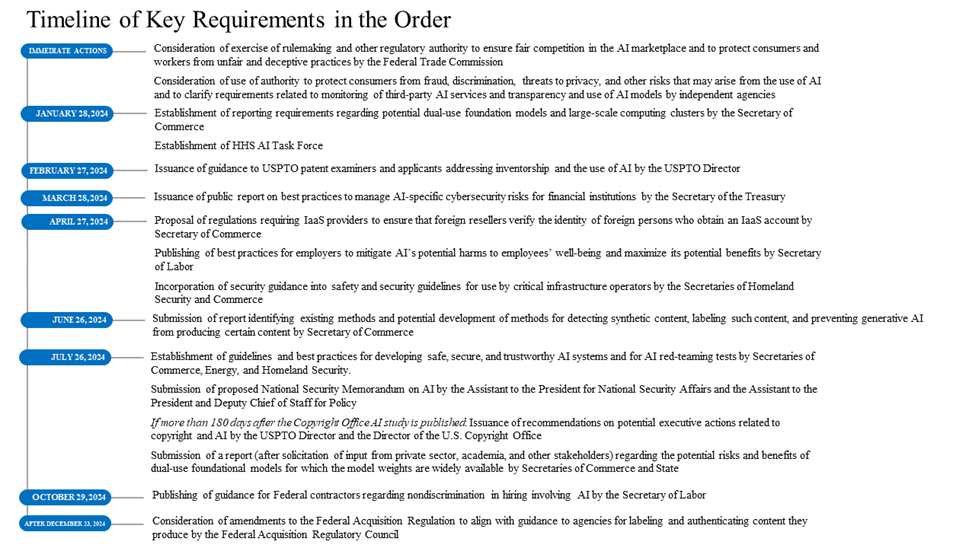

Timeline of Key Requirements in the Order


This post is as of the posting date stated above. Sidley Austin LLP assumes no duty to update this post or post about any subsequent developments having a bearing on this post.